The most prominent members of the PDP family.

This paper gives an introduction to the class of computers commonly referred to as "minicomputers", which represented the state of the art in the 1970s. Starting with an overview of relevant events before and during this period, it presents both the hardware and the software that ran on these machines, using the DEC PDP-11 as an example to illustrate some of these principles.

In order to properly understand the relative importance of minicomputers, one has to place them in their historical context. Relevant events began well before the first minicomputer was built:

Clearly, the pace of technical progress was quickening compared to the era of mainframe computers. A computer was no longer a unique piece of equipment but was manufactured on an industrial scale, which reduced the production cost of each unit and hence its price. Also, the computer models produced over a certain period of time often differed only slightly in their architectural design. It was IBM, unlike DEC, that first realized the importance of this concept of compatibility and ensured that all of its future systems would be backward-compatible with its System/360 platform.

During the 1970s, important developments were concentrated at a few places. As already mentioned, the MIT AI Lab was among the first to use a DEC PDP system and contributed to research, especially with its ITS (Incompatible Timesharing System) operating system. Alongside it were SAIL (the Stanford AI Lab) and Xerox PARC (Palo Alto Research Center), which concentrated more on user interface issues.

Computer hardware tends to come in generations that are linked to the underlying technology used to build it. The table below gives an overview of the predominant technology in each period:


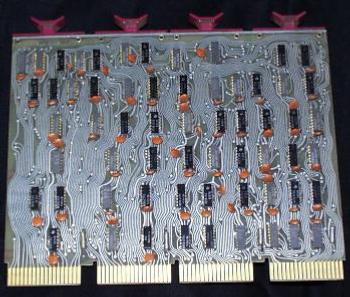

Minicomputers of the 1970s were built from integrated circuits (ICs), so-called "chips". This technology for constructing logic gates was invented independently by Jack Kilby and Robert Noyce in the late 1950s. They imprinted circuit networks on an insulating substrate and used semiconductor material, such as silicon or germanium, to perform the actual logical operations. The advantages of integrated circuits over discrete transistors were significant: they were not only smaller and faster but also more reliable, and they consumed less power. Furthermore, the production of these chips could now be automated, which made them more widely available at a considerably lower price.

J. Presper Eckert, co-inventor of the ENIAC, noted in 1991: "I didn't envision we'd be able to get as many parts on a chip as we finally got." In fact, in 1965 Gordon Moore, then a semiconductor engineer at Fairchild Semiconductor who went on to co-found Intel three years later, observed that the complexity of integrated circuits doubled every year (Moore's Law). This bold prediction held roughly until the mid-1970s; in 1975 Moore revised the rate to a doubling about every two years, although a period of 18 months is also frequently quoted.
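Moore's observation is a statement about compound growth: if a chip holds N0 components in year t0 and the count doubles every T years, it holds N0 · 2^((t − t0)/T) components in year t. A minimal sketch of this arithmetic, assuming an illustrative starting value of 64 components per chip in 1965 (a round number chosen for the example, not a measured figure); the function name `projected_components` is hypothetical:

```python
def projected_components(n0: float, start_year: int, year: int, doubling_years: float) -> float:
    """Compound doubling: n0 * 2 ** ((year - start_year) / doubling_years)."""
    return n0 * 2 ** ((year - start_year) / doubling_years)


# Assumed starting point for illustration: 64 components per chip in 1965.
for period in (1.0, 1.5, 2.0):
    count = projected_components(64, 1965, 1975, period)
    print(f"doubling every {period} years -> about {count:,.0f} components by 1975")
```

With yearly doubling, ten years multiply the count by 2^10 = 1024, so 64 components become 65,536. This is the same order of magnitude as the roughly 65,000 components per chip that Moore projected for 1975, whereas a two-year doubling period yields only a factor of 32 over the same decade.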

Digital Equipment Corporation (DEC for short) was the leader in the minicomputer market, both financially and technologically. Its most successful product line was the PDP series, PDP standing for Programmed Data Processor. As already mentioned, these computers were relatively cheap, with prices in the tens of thousands of dollars, which made them quite popular at universities.

Furthermore, they introduced interactive computing: for the first time, users received immediate feedback while working. Until then, programs were usually submitted on punch cards, and the results of the computation were eventually returned on other punch cards. Minicomputers used line printers and later cathode-ray tube (CRT) displays, or monitors, to give users quick feedback. Because multiple terminals (combinations of input and output devices) were attached to the same main unit, the input, output and processing of all these terminals had to be handled simultaneously. This is why minicomputers such as the PDP-11 came with time-sharing operating systems capable of handling multiple tasks at the same time.
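Round-robin scheduling is one classic way a time-sharing system can share a single processor among several terminals: each job runs for a fixed time slice (the quantum) and, if it is not finished, goes to the back of the queue. The sketch below is a simplified illustration, not a model of any particular PDP-11 operating system; the `Job` class, the terminal names and the abstract time units are hypothetical.

```python
from collections import deque
from dataclasses import dataclass


@dataclass
class Job:
    terminal: str   # hypothetical label of the terminal that submitted the job
    remaining: int  # CPU time still needed, in abstract time units


def round_robin(jobs, quantum):
    """Run jobs in turn for at most `quantum` units each; return (terminal, finish_time) in completion order."""
    if quantum <= 0:
        raise ValueError("quantum must be positive")
    queue = deque(jobs)
    clock = 0
    finished = []
    while queue:
        job = queue.popleft()
        run = min(quantum, job.remaining)
        clock += run
        job.remaining -= run
        if job.remaining > 0:
            queue.append(job)  # unfinished: go to the back of the queue
        else:
            finished.append((job.terminal, clock))
    return finished


print(round_robin([Job("tty0", 5), Job("tty1", 2), Job("tty2", 4)], quantum=2))
```

For three jobs needing 5, 2 and 4 units with a quantum of 2, the short job from `tty1` finishes at time 4 even though it was queued behind a longer one; this responsiveness is what made a shared machine feel interactive to each user.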

The DEC PDP-11 was the predecessor of the VAX-11, but despite its number it was not a successor of the PDP-10. The PDP-10 was a 36-bit machine delivered with the TOPS-10 operating system and the MACRO-10 assembler, whereas the 16-bit PDP-11 had its own operating systems, such as RT-11, RSX-11 and RSTS/E, and its own assembler, MACRO-11. At MIT, a separate operating system called ITS (Incompatible Timesharing System) was developed for the PDP-6 and PDP-10.

The main hardware features of the PDP-11 were:

Because of the UNIBUS architecture, in which the CPU, memory and peripheral devices all communicate over a single shared bus, it was possible to build the components of the PDP-11 in a modular way. This had the additional benefit that the system could be fitted with numerous extensions, thus enhancing its overall capabilities. Below is a list of some of the available extensions:

The most important single piece of software running on these machines was, of course, the operating system. Until the early 1970s, operating systems were written in tight, machine-specific assembly code. This was considered necessary because only hand-coding the innermost loops could achieve optimal performance. The obvious drawback of this approach was that the code could not be reused on another architecture that differed even slightly. While IBM did quite a good job of keeping things compatible, DEC was not very concerned about this issue. In fact, even within the PDP series the machine designs were quite different; for example, the word length ranged from 12 bits (PDP-8) to 36 bits (PDP-10), with 16 bits on the PDP-11 and 18 bits on machines such as the PDP-1 and PDP-7.
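A small illustration of why word length mattered: assembly code often relied on how values overflowed in a register of a given width. The sketch below, assuming plain unsigned wrap-around arithmetic, adds 1 to 4095 on models with 12-bit (PDP-8), 16-bit (PDP-11), 18-bit (PDP-7) and 36-bit (PDP-10) words; the function name `add_in_word` is hypothetical, and real carry or link flags are ignored.

```python
# Word lengths (in bits) of a few PDP models.
WORD_BITS = {"PDP-8": 12, "PDP-11": 16, "PDP-7": 18, "PDP-10": 36}


def add_in_word(a: int, b: int, bits: int) -> int:
    """Unsigned addition that wraps around modulo 2**bits, like a `bits`-wide register.

    Simplification: carry/overflow flags (e.g. the PDP-8 link bit) are ignored.
    """
    return (a + b) & ((1 << bits) - 1)


for machine, bits in WORD_BITS.items():
    # 4095 is the largest unsigned value that fits in 12 bits.
    print(f"{machine:7} 4095 + 1 = {add_in_word(4095, 1, bits)}")
```

On the 12-bit model the sum wraps to 0, while the wider machines yield 4096, so a hand-tuned routine that depended on that wrap-around would silently compute something different after being moved to a machine with another word length.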

In 1964, MIT, General Electric and Bell Labs started work on a new kind of operating system called "Multics". Building on MIT's earlier CTSS (Compatible Time-Sharing System), its main goal was to hide the complexity of the computer from programmers as well as users. However, dissatisfied with the project's slow progress, Bell Labs withdrew from it in 1969.

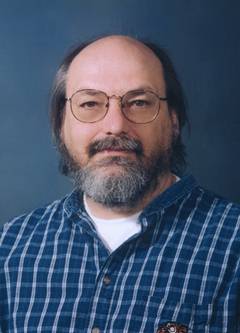

Fortunately, Ken Thompson, who had been on the Bell Labs team working on Multics, implemented parts of its design together with some of his own ideas on a salvaged PDP-7, initially in assembly language; he also created the B programming language on that machine. The resulting system was named "Unix". Around the same time, Dennis M. Ritchie developed the C programming language, which grew out of B. Between 1972 and 1974, Thompson, working with Ritchie, reimplemented Unix in C (the kernel rewrite was completed in 1973) while continually improving the system. A first version of Unix was presented publicly at the ACM Symposium on Operating Systems Principles in 1973.

Ken Thompson and Dennis M. Ritchie

The Unix operating system and the C programming language broke with the old principle that system-level programming had to be done in assembler. Although this new approach did not initially achieve the same performance, it had another huge advantage: once the C compiler had been adapted to produce machine code for a new architecture, existing C programs could be recompiled and would run on the new platform without major changes. This was impossible with assembly code.
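To make the retargeting argument concrete, here is a minimal sketch of a compiler with one front end (a shared expression tree) and two interchangeable back ends. The tuple-based expression format, the function names `gen_stack` and `gen_register`, and both instruction sets are hypothetical; the register syntax is only loosely modelled on PDP-11 assembly and is not real PDP-11 code.

```python
# A tiny expression tree: ("num", 3) or ("add" | "mul", left, right).

def gen_stack(expr):
    """Back end A (hypothetical stack machine): push operands, then apply the operator."""
    if expr[0] == "num":
        return [f"PUSH {expr[1]}"]
    op, left, right = expr
    return gen_stack(left) + gen_stack(right) + [op.upper()]


def gen_register(expr, reg=0):
    """Back end B (hypothetical register machine): the value of expr ends up in register R{reg}."""
    if expr[0] == "num":
        return [f"MOV #{expr[1]}, R{reg}"]
    op, left, right = expr
    code = gen_register(left, reg) + gen_register(right, reg + 1)
    return code + [f"{op.upper()} R{reg + 1}, R{reg}"]  # combine into R{reg}


program = ("add", ("num", 2), ("mul", ("num", 3), ("num", 4)))  # 2 + 3 * 4
print(gen_stack(program))
print(gen_register(program))
```

The program itself never changes; only the back end is swapped. Porting to a new machine therefore means writing one new code generator rather than rewriting every program, which is the essence of why Unix and its tools could follow C onto new hardware.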

The concept of making software portable between platforms eliminated the need to start from scratch for each new architecture. Unix, being the first portable operating system, was immensely successful: by the end of the 1970s, C compilers existed for machines such as the IBM System/370, the Honeywell 6000 and the Interdata 8/32, and Unix itself had been ported to the Interdata 8/32. Common tools could now also be ported easily to all of these platforms. Together with the standardized system-call interface through which Unix gives access to the machine, these two factors made programming considerably easier than ever before.
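The Unix system-call interface is small: a program opens a file to obtain a file descriptor and then reads, writes and closes it through that descriptor. As a sketch of this style, the function below copies a file using only those calls. It uses Python's `os` module as a convenient stand-in, since `os.open`, `os.read`, `os.write` and `os.close` are thin wrappers around the corresponding POSIX calls; `copy_file` and the 512-byte buffer size are illustrative choices, not taken from any historical Unix source.

```python
import os
import tempfile


def copy_file(src_path, dst_path, bufsize=512):
    """Copy a file using only open/read/write/close on file descriptors."""
    src = os.open(src_path, os.O_RDONLY)
    try:
        dst = os.open(dst_path, os.O_WRONLY | os.O_CREAT | os.O_TRUNC, 0o644)
        try:
            while True:
                chunk = os.read(src, bufsize)
                if not chunk:  # read returns an empty result at end of file
                    break
                view = memoryview(chunk)
                while view:  # write may accept fewer bytes than requested
                    written = os.write(dst, view)
                    view = view[written:]
        finally:
            os.close(dst)
    finally:
        os.close(src)


with tempfile.TemporaryDirectory() as tmp:
    a, b = os.path.join(tmp, "a.txt"), os.path.join(tmp, "b.txt")
    with open(a, "w") as f:
        f.write("hello, unix\n")
    copy_file(a, b)
    with open(b) as f:
        print(f.read(), end="")
```

Because the same handful of calls exists on every Unix system, a tool written against them needs no changes beyond recompilation when it moves to a new machine.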

The world of computers changed dramatically during the 1970s. Compared with the mainframes of the 1950s and 1960s, not only had the hardware changed considerably within ten years, but new concepts had also rendered old assumptions obsolete: systems now needed to be compatible with each other, interactivity had become a prerequisite, and, as far as software was concerned, portability was the new way to go.

Yet the 1970s should be considered just a transitional phase. As technology advanced ever more rapidly, it became clear that new ideas would soon challenge these assumptions once again. The desktop PC revolution was just around the corner.